Hi

Goals

- Get hands-on experience running MapReduce and gain a deeper understanding of the MapReduce paradigm.

- Become more familiar with Apache Spark and get hands on experience with running Spark on a local installation.

- Learn how to apply the MapReduce paradigm to Spark by implementing certain algorithms in Spark.

The Lab Files

Copy the lab files from the instructional servers to your lab account with

$ cp -r ~cs61c/labs/12/ ~/labs/12/

Alternatively, secure-copy (scp) them from the instructional servers to your own laptop with

$ scp -r cs61c-xxx@hive10.cs.berkeley.edu:~cs61c/labs/12/ ~/YOUR_FILEPATH

And if you want to secure-copy them back to your class account:

$ scp -r ~/YOUR_FILEPATH/12 cs61c-xxx@hive10.cs.berkeley.edu:~/YOUR_LABACCT_FILEPATH

Background Information

In lecture we've exposed you to cluster computing (in particular, the MapReduce framework), how it is set up and executed, but now it's time to get some hands-on experience running programs with a cluster computing framework!

In this lab, we will be introuducing you to a cluster computing framework called Spark. Spark was developed right here at Berkeley before being donated to the Apache Software Foundation in 2013. We will be writing Python code to run in Spark to give us some practice in writing Map and Reduce routines.

Spark has it's own website, so you are free to try to install it onto your local machines, although it may be easier to ssh into the lab computers to complete this lab.

Avoid Global Variables

When using Spark, avoid using global variables! This defeats the purpose of having multiple tasks running in parallel and creates a bottleneck when multiple tasks try to access the same global variable. As a result, most algorithms will be implemented without the use of global variables.

How to run Spark via the command line

For this lab we will be providing you all with a Makefile that will help you run your Spark files, but should you create your own new files (or use Spark outside of this class, which you should!), you will need to know how to run Spark via the command line. For our version of Spark (which is 1.1.0), in order to run your Spark file xxx.py (similar to how you run your Python files with python xxx.py), you just run the following command:

$ spark-submit xxx.py # Runs the Spark file xxx.py

If your Spark file takes in arguments (much like the Spark files we have provided), the command will be similar, but you will instead add however any arguments that you need, like so:

$ spark-submit xxx.py arg1 arg2 # Runs the Spark file xxx.py and passes in arg1 and arg2 to xxx.py

Spark also includes this neat interpreter that runs with Python 2.7.3 and will let you test out any of your Spark commands right in the interpreter! The interpreter also takes in files (pass in the file with --py-files flag) and will load your file in the same directory as the executable. If you are looking to just run the interpreter, the command is as follows:

$ pyspark # Runs the Spark interpreter. Feel free to test stuff out here!

If you want to preload some files (say a.py, b.py, c.py), you can run the following command:

$ pyspark --py-files a.py, b.py, c.py # Runs the Spark interpreter and you can now import stuff from a, b, and c!

Spark Debugging Quick-tips

If you ever find yourself wondering why your output is strange or something breaks when you run your Spark files, remember these few quick-tips!

- Make use of the take function! The take function can be run on any RDD object (so any object you are trying to parallelize or have run any transformation / action functions on. You will use these later). This function takes in one argument num, which is an integer and it will return back to you the first num elements inside of your RDD object. For more information about this, check out the documentation on take.

- You can also test out your functions (map, reduce, etc) inside of the Spark interpreter (pyspark, mentioned above). Simply import the function you want to test out in pyspark (explained above) and you will be able to run this function and check if the output is what you expected! Here is a short example from wordcount.py:

$ pyspark --py-files wordcount.py # Run the pyspark interpreter with the wordcount.py file in the executable's directory >>> from wordcount import flat_map # Import the function you want to test out, in this case, flat_map >>> file = sc.sequenceFile("/home/ff/cs61c/data/billOfRights.txt.seq") # Load up the sequence file billOfRights.txt.seq >>> file.take(5) # Returns back to you the first 5 elements in billOfRights.txt.seq [(<doc_name_1>, <text 1>), (<doc_name_2>, <text 2>), ..., (<doc_name_5>, <text 5>)] >>> flat_map_output = file.flatMap(flat_map) # Run the imported function flat_map on the file >>> flat_map_output.take(5) # Return back the first 5 words in your document. [u'Amendment', u'I', u'Congress', u'shall', u'make']

Documentation, and Additional Resources

- A quickstart programming guide for Spark (click the Python tab to see the Python code) is available here!

- The version of Spark we will be using will be 1.1.0 and the link to the API documentation is available here (Note that the docs say version 1.6.2, but the versions are compatible).

Exercises

The following exercises use three different sample input files, two of which are provided by the staff and can be found in ~cs61c/data:

- billOfRights.txt.seq -- the 10 Amendments split into separate documents (a very small input)

- complete-works-mark-twain.txt.seq -- The Complete Works of Mark Twain (a medium-sized input)

You don't need to copy these files over to your account. Their locations are already setup for you in the Makefile.

Notice the .seq extension, which signifies a sequence file that is readable by Spark. These are NOT human-readable. Spark supports other input formats, but you will not need to worry about that for this lab. To get a sense of the texts you'll be using, simply drop the .seq portion to view the text file (i.e. ~cs61c/data/billOfRights.txt).

Although an exercise may not explicitly ask you to use it, we recommend testing your code on the billOfRights data set first in order to verify correct behavior and help you debug.

We recommend deleting output directories when you have completed the lab, so you don't run out of your 500MB of disk quota. You can do this by running:

$ make destroy-all

Please be careful with this command as it will delete all outputs generated in this lab.

Exercise 0: Generating an Input File for Spark

For this exercise you will need the Makefile and Importer.java. In this lab, we'll be working heavily with textual data. We have some pre-generated datasets as indicated above, but it's always more fun to use a dataset that you find interesting. This section of the lab will walk you through generating your own dataset using works from Project Gutenberg (a database of public-domain literary works).

Step 1: Head over to Project Gutenberg, pick a work of your choosing, and download the "Plain Text UTF-8" version into your lab directory.

Step 2: Open up the file you downloaded in your favorite text editor and insert "---END.OF.DOCUMENT---" (without the quotes) by itself on a new line wherever you want Spark to split the input file into separate (key, value) pairs. The importer we're using will assign an arbitrary key (like "doc_xyz") and the value will be the contents of our input file between two "---END.OF.DOCUMENT---" markers. You'll want to break the work into reasonably-sized chunks, but don't spend too much time on this part (chapters/sections within a single work or individual works in a body of works are good splitting points).

Hint: A short work that has 3 clearly defined sections is Franz Kafka's Metamorphosis.

Step 3: Now, we're going to run our Importer to generate a .seq file that we can pass into the Spark programs we'll write. The importer is actually a MapReduce program, written using Hadoop! You can take a look at Importer.java if you want, but the implementation details aren't important for this part of the lab. You can generate your input file like so:

$ make generate-input myinput=YOUR_FILE_FROM_STEP_2.txt

Dang that's a lot of command line garbage. It's OK don't worry. As long as a file was generated in the "convertedOut" directory, it worked as it was supposed to.

A fact that should help clarify some stuff:

Throughout the rest of this lab, you'll be able to run the mapreduce programs we write using make commands.

The make commands will be of the form make PROGRAMNAME-INPUTSIZE

For example, if we're running

the "wordcount" program on a "small" input (AKA the Bill of Rights), the make command would be:

make wordcount-small.

If you wish to try out the "wordcount" program on the input file you generated here, you can instead run:

$ make wordcount myinput=YOUR_SEQ_FILE_FROM_STEP_3.txt.seq # Output in wc-out-wordcount/ directory

DANG that's even MORE command line garbage. It's OK no worries. As long as a file called part-00000 was generated in the directory "wc-out-wordcount" along with an empty _SUCCESS file, everything worked as it was supposed to.

Exercise 1: Running Word Count

POP QUIZ: WHAT IS AN RDD? If you don't know, make sure you know at least where to find one in the code for Exercise 1 before you start Exercise 2.

For this exercise you will need the Makefile and already-completed wordcount.py. You can run it on our desired input using a convenient make command:

$ make wordcount-small

This will run wordcount.py over billOfRights.txt.seq. Your output should be visible in wc-out-wordcount-small/part-00000.

Task: Take a look at wordcount.py. You'll be implementing two other files which are very similar to this one, so get a good understanding of what's going on.

Note: Different exercises need to be solved by reconsidering how the functions map(), flat_map() and reduce() are implemented and called and in which order. Keep this in mind.

Note also that map(), flat_map(), and reduce() are user-defined functions while flatMap, map (the one called by dot notation), and reduceByKey are built-in functions for Spark.

Hint: Read the comments in wordcount.py to get a better idea of what is going on!

Next, try out wordcount.py on the larger input file complete-works-mark-twain.txt.seq. One interesting feature of Spark is that it is an in-memory distributed computing engine, so it has no default file storage. Instead, we use Hadoop Distributed File System (HDFS) to help store our output. You are welcome to read more about Hadoop on your own. In general, Hadoop requires that the output directory not exist when a MapReduce job is executed, however our Makefile takes care of this by removing our old output directory.

$ make wordcount-medium

Your output for this command will be located in the wc-out-wordcount-medium directory. Search through the file for a word like "the" to get a better understanding of the output.

Checkoff [1/3]

- Explain how wordcount.py counts words to the person checking you off.

Exercise 2: Document Word Count

Now it's time to actually code some stuff in Spark.

Open docwordcount.py.

Notice that it currently contains the same code as wordcount.py, which you just tried for yourself.

Your task: Modify it to count the number of documents containing each word rather than the number of times each word occurs in the input.

To help you with understanding the code, we have added some comments, but feel free to check out transformations and actions on the Spark website for a more detailed explanation on some of the methods that can be used in Spark.

In this part, you may find it useful to look at the transformations and actions link provided above, as there are methods that you can use to help sort an output or remove duplicate items.

Hint: Look at the distinct() transformation!

Hint2: Our current flat_map function disregards document[0], which is the document ID number.

If we want to count the number of distinct documents that contain a given word, we might need to distinguish words in document X from words in

document Y. Thus, you may find it helpful to make use of the document ID in flat_map()...

WARNING: You may NOT use the set() built-in Python function to convert lists to sets! Although there's a common valid solution that students come up with using this function, it kinda defeats the purpose of using MapReduce to solve this problem. Finding and eliminating the duplicates is much less efficient using this method when the documents are large. Using the .distinct() transformation becomes a much more suitable approach.

Task: Finally, make sure the output is sorted in alphabetical order. (Hint: Is there another transformation you can use?)

You can test docwordcount.py using either of the following (for our two data sets):

$ make docwordcount-small # Output in wc-out-docwordcount-small/

OR

$ make docwordcount-medium # Output in wc-out-wordcount-medium/

Checkoff [2/3]

- Explain your modifications to docwordcount.py to your TA.

- Show your output for billOfRights (aka the output for running make docwordcount-small). In particular, what values did you get for "Amendment", "the", and "arms"? Do these values make sense?

Exercise 2.5: Let's take a quick break, shall we?

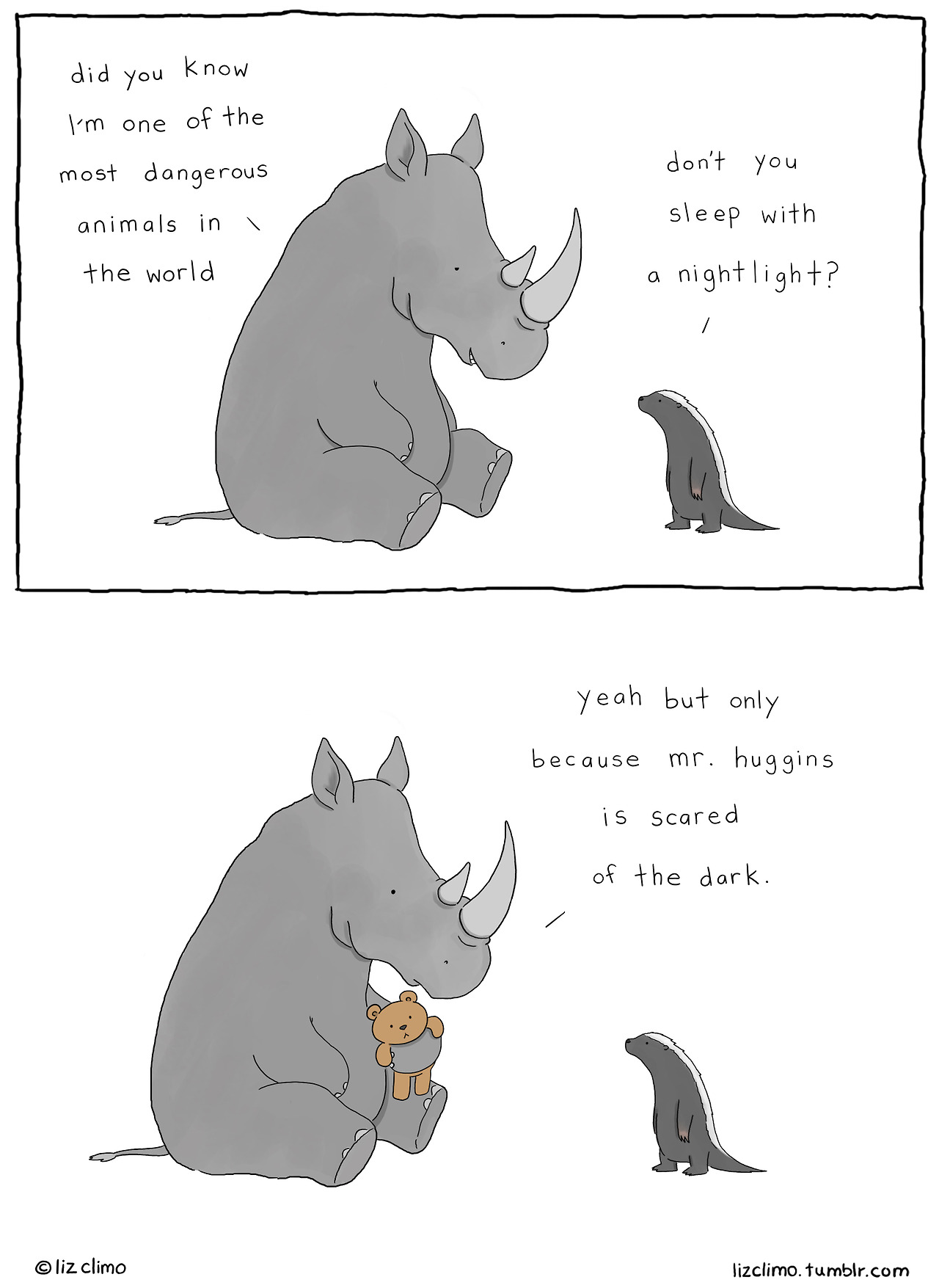

Here's today's Liz Climo comic:

I wonder how she would react to her comics being featured on a UC Berkeley CS course website...

Wait, is this copyright infringement/illegal?

Let me tell you a story.

When I was a little kid, my parents expected me to practice playing the piano every night. I would have lessons with my piano teacher once a week, and for any given week, I had a set of assigned pieces to practice and polish. My parents wanted me to practice each of my assigned pieces at least once per day.One day, (I can't remember exactly why), school was cancelled, and I stayed at home for the entire day, babysat by my grandma. Before my parents left for work, they told me to practice piano during the day since I was going to have so much time. Fact: back then, I did not enjoy practicing at all. So, being the bright and sneaky little sneaker I was, I decided to pretend that I had practiced. How would they know?

At about noontime, my dad called home to check up on me. Incidentally, the landline phone we had back then didn't display caller IDs, so I was always nervous to pick up a call. What if it wasn't from my mom or dad? I somehow figured out that if you let the call go to voicemail, you could hear the caller "leave a message after the beep, thank you." If you picked up the phone while the caller was leaving the message, you could still talk to the caller normally, so I would always do this to make sure I didn't pick up a call from anyone who wasn't my mom or dad. Where was I? Oh yeah. Of course, when my dad called, he asked if I had practiced piano like he told me to.

"Yeah!" came my lie. "I even practiced all the pieces twice!"

Hmmm...I wanted to finish this story so I could have some kind of life lesson here, but I feel like it's getting too long, so I'll finish it next time (Thursday's lab).

Checkoff [2.5/3]

- Guess how the story ends!

Exercise 3: Full Text Index Creation

Last exercise!

Open index.py. Notice that the code is similar to docwordcount.py.

Task: Modify it to output every word and a list of locations (document identifier followed by the word index of EACH time that word appears in that document).

- Make sure your word indices start at zero.

- There should be a new line for every unique (word, document) pair (see below).

(word1 document1-id, word# word# ...) (word1 document2-id, word# word# ...) . . . (word2 document1-id, word# word# ...) (word2 document3-id, word# word# ...) . . .

Let me say that again: Notice that there will be a line of output for EACH document in which that word appears and EACH word-and-document pair should only have ONE list of indices. Remember that you need to also keep track of the document ID as well.

Psst... For this exercise, you may not need all the functions we have provided. If a function is not used, feel free to remove the method that is trying to call it. Make sure your output for this is sorted as well (just like in the previous exercise).

You can test index by using either of the following commands (for our two data sets):

$ make index-small # Output in wc-out-index-small/

OR

$ make index-medium # Output in wc-out-index-medium/

The output from running make index-medium will be a large file. In order to more easily look at its contents, you can use the commands cat, head, more, and grep:

$ head -25 OUTPUTFILE # view the first 25 lines of output $ cat OUTPUTFILE | more # scroll through output one screen at a time (use Space) $ cat OUTPUTFILE | grep the # output only lines containing 'the' (case-sensitive)

Make sure to verify your output. Open complete-works-mark-twain.txt and pick a few words. Manually count a few of their word indices and make sure they all appear in your output file.

Checkoff [3/3]

- Explain your code in index.py to your TA.

- Show your TA the first page of your output for the word "Mark" in complete-works-mark-twain.txt.seq to verify correct output. You can do this by running: cat wc-out-index-medium/part-00000 | grep Mark | less